Artificial Intelligence (AI) is no longer something we only see in movies; it’s a real part of our daily routines. Think about it: Netflix recommending your next favorite show, Google Maps finding the fastest route, or chatbots answering questions on websites. These are all AI at work, quietly making life easier. But with this rapid growth comes an equally important conversation about AI ethics: its recent developments and its challenges.

AI isn’t just about speed, convenience, or cool features—it’s about whether we can trust it. Can we be sure the decisions AI makes are fair, that our privacy is protected, and that someone takes responsibility when things go wrong? These aren’t just technical concerns—they’re ethical ones.

That’s why it’s important to step back and ask: Can the use of AI truly be ethical? To answer that, let’s explore why AI ethics matters, the principles guiding it, the challenges we face, and the steps being taken globally to build AI that works responsibly for everyone.

Why AI Ethics Matters

Imagine applying for a loan, and instead of a human reviewing your case, an algorithm decides whether you’re approved. Or picture a self-driving car making a split-second decision in an accident. Now ask yourself:

- Was the decision fair?

- Was your personal data handled responsibly?

- If something goes wrong, who is accountable?

Each of these questions is about values as much as it is about engineering.

At its core, AI ethics is about ensuring that AI systems act in ways that are fair, transparent, and beneficial to humanity. Without ethical guardrails, AI risks amplifying existing biases, threatening privacy, and producing decisions that are difficult to question or reverse.

Key Principles of AI Ethics

To make AI trustworthy, several ethical principles guide its development and use:

1. Fairness and Non-Discrimination

AI should not reinforce or worsen societal inequalities. For example, an AI recruitment tool must avoid favoring one gender or race due to biased training data.

2. Transparency

AI should not operate like a “black box.” Users deserve to know how decisions are made and what factors influenced the outcome.

3. Accountability

AI systems don’t exist in isolation; humans design and deploy them. When something goes wrong, there should always be a clear line of responsibility.

4. Privacy Protection

Since AI relies on personal data, protecting that data from misuse, surveillance, or unauthorized access is critical.

5. Beneficence

AI must be developed to benefit humanity—not just to maximize profits for corporations. It should enhance well-being, improve efficiency, and serve society.

While these principles sound straightforward, implementing them in real-world AI systems is where the real struggle lies.

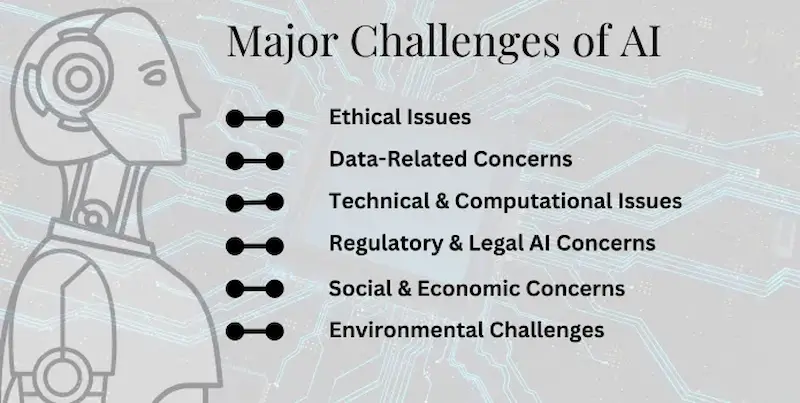

Major Challenges in AI Ethics

Here are some of the most pressing AI ethics challenges experts are currently grappling with:

1. Bias in AI Systems

AI systems learn from data—but data reflects human history, including its inequalities. For example, facial recognition software has been found to be less accurate for people with darker skin tones. These biases can lead to unfair and even harmful outcomes if not addressed.

2. Lack of Transparency

Many advanced AI systems, especially deep learning models, function in ways that even their creators can’t fully explain. This “black box” problem makes it hard to build trust. If a system can’t explain its decisions, how can users rely on it?
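
To make the contrast with a “black box” concrete, here is a minimal sketch of a scoring model that can explain itself. It reuses the loan scenario from earlier; the feature names, weights, and threshold are all made up for illustration and are not taken from any real lender or library. Because the score is a simple weighted sum, each factor’s contribution to the outcome can be reported directly, which is exactly what most deep learning models cannot do out of the box.

```python
# Hypothetical weights for an illustrative linear loan-scoring model.
# None of these numbers come from a real system.
WEIGHTS = {
    "income_thousands": 0.04,
    "years_employed": 0.3,
    "missed_payments": -1.5,
}
BIAS = -2.0       # assumed baseline score
THRESHOLD = 0.0   # assumed approval cutoff


def explain_decision(applicant):
    """Return the decision together with each feature's contribution."""
    contributions = {
        name: WEIGHTS[name] * applicant[name] for name in WEIGHTS
    }
    score = BIAS + sum(contributions.values())
    return {
        "approved": score >= THRESHOLD,
        "score": score,
        "contributions": contributions,
    }


applicant = {"income_thousands": 50, "years_employed": 2, "missed_payments": 1}
result = explain_decision(applicant)

# Show the factors from most harmful to most helpful for this applicant.
for name, value in sorted(result["contributions"].items(), key=lambda kv: kv[1]):
    print(f"{name}: {value:+.2f}")
print("approved:", result["approved"])
```

In this sketch the applicant can see which factor pulled the score down the most, so the decision can be questioned or corrected.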

3. Accountability Gaps

If a self-driving car causes an accident, who should take responsibility—the manufacturer, the software developer, or the car owner? Current laws don’t provide clear answers, leaving major accountability gaps.

4. Privacy Concerns

AI thrives on massive amounts of personal data, from browsing habits to health records. Without strong safeguards, this creates opportunities for surveillance, hacking, and unethical use of sensitive information.

5. Global Regulation Challenges

AI is global by nature, but regulations vary from one country to another. While the EU pushes for strict ethical rules, some regions prioritize innovation over regulation. Creating a global standard is one of the toughest challenges in AI governance.

Recent Developments in AI Ethics

The good news is that governments, researchers, and corporations are taking AI ethics seriously. Some key AI ethics developments include:

- Establishing Ethical Guidelines: The European Union adopted the AI Act in 2024, one of the world’s first comprehensive AI regulations, which takes a risk-based approach and emphasizes human oversight. Similarly, UNESCO adopted its Recommendation on the Ethics of Artificial Intelligence in 2021, a global framework to encourage responsible AI adoption.

- AI Auditing Tools: Researchers are creating tools that can detect, measure, and help mitigate bias in AI models, supporting fairer decision-making.

- Corporate Responsibility: Tech giants like Google, Microsoft, and IBM are setting up internal ethics boards and publishing AI transparency reports to reassure the public.

- Rising Public Awareness: People are asking tougher questions about how AI affects their privacy, job security, and freedom. This growing pressure is forcing governments and companies to take ethical concerns more seriously.
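
As a rough illustration of what the auditing tools mentioned above measure, the sketch below computes one common fairness check: the gap in approval rates between groups, often called the demographic parity difference. The function names and the sample decisions are hypothetical, and real auditing tools look at many more metrics than this one.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up decisions for two groups, purely for illustration.
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(sample)
gap = demographic_parity_gap(sample)
print(rates)
print("gap:", gap)
```

A gap near zero means the groups are approved at similar rates. A large gap does not prove discrimination on its own, but it is a signal that the system deserves a closer look.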

Why Everyone Has a Role to Play

AI ethics isn’t just a topic for developers, policymakers, or tech experts—it affects all of us. Whether you’re a student using AI chatbots, a business owner adopting AI for marketing, or simply someone scrolling through AI-curated social media feeds, you are impacted by how AI is built and used.

Being aware of AI ethics developments and challenges empowers you to:

- Question the fairness of AI systems.

- Demand accountability from companies.

- Advocate for stronger regulations.

- Use AI responsibly in your own daily life or work.

Final Thoughts

AI is here to stay, and it’s only going to grow smarter and more influential. But with power comes responsibility. Understanding AI ethics developments and challenges isn’t just about keeping up with technology; it’s about shaping a future where AI enhances humanity instead of harming it.

The question is not whether AI will transform the world—it already has. The real question is: Will we guide it ethically, or let it run unchecked?

So, the next time you interact with Siri, Alexa, or any AI-powered system, remember: the ethical choices behind that technology matter just as much as the convenience it provides.

FAQs

1. What does AI ethics mean?

AI ethics refers to the principles and guidelines that ensure artificial intelligence is designed and used responsibly. It focuses on fairness, accountability, transparency, and protecting human rights.

2. Why is AI ethics important?

AI ethics is important because AI influences decisions in healthcare, finance, hiring, law enforcement, and more. Without ethical frameworks, AI could reinforce discrimination, invade privacy, or make decisions that no one is accountable for.

3. What are the biggest challenges in AI ethics?

The major challenges include bias in AI systems, lack of transparency, accountability gaps, privacy issues, and inconsistent global regulations.

4. How are governments addressing AI ethics?

Governments are developing regulations like the EU AI Act, while organizations like UNESCO promote global ethical guidelines. Many countries are also funding AI research with an ethics-first approach.

5. Can AI ever be 100% ethical?

Probably not—because AI reflects the data and values of the humans who build it. However, with strong ethical frameworks and regulations, AI can be made more trustworthy and less harmful.